The dream of truly autonomous vehicles, once confined to science fiction, is rapidly becoming reality. “Self-Driving Tech Breakthroughs” isn’t merely a fleeting headline; it describes a profound, ongoing transformation of the automotive industry, one that promises to redefine not only how we travel but also urban planning, logistics, and personal freedom. These advances are driven by a convergence of cutting-edge artificial intelligence, sophisticated sensor technology, and ever-increasing computational power, bringing us closer to a future in which cars navigate themselves with minimal, or even zero, human intervention.

The Levels of Driving Automation

To fully appreciate the scope of “self-driving tech breakthroughs,” it’s essential to understand the classification system defined by SAE International (formerly the Society of Automotive Engineers) in its J3016 standard. This standardized framework provides clarity amid the hype and delineates the capabilities and responsibilities at each level:

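A. Level 0: No Driving Automation:
* The human driver performs all driving tasks, from steering and acceleration to braking.
* Warnings and momentary interventions, such as blind-spot warnings or automatic emergency braking, do not raise a vehicle above Level 0 because they do not provide sustained control.
* Examples: Most traditional vehicles, including those whose only assistance features are warnings or emergency braking.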

B. Level 1: Driver Assistance:
* The vehicle can assist with either steering OR acceleration/braking, but not both simultaneously.
* The human driver is responsible for all other aspects of driving and must constantly supervise.
* Examples: Adaptive Cruise Control (ACC), where the car adjusts speed to maintain distance, or Lane Keeping Assist (LKA), which provides minor steering corrections.

C. Level 2: Partial Driving Automation:
* The vehicle can control both steering AND acceleration/braking simultaneously under specific conditions.
* The human driver remains responsible for monitoring the environment and must be ready to intervene at any moment. This is where most advanced driver-assistance systems (ADAS) currently reside.
* Examples: Tesla’s Autopilot, General Motors’ Super Cruise, and Ford’s BlueCruise, which offer hands-on (or, on approved roads, hands-free) driving assistance but require continuous driver attention.

D. Level 3: Conditional Driving Automation:
* The vehicle can perform all driving tasks under specific conditions (e.g., highway driving, traffic jams).
* While the feature is engaged, the human driver does not need to monitor the environment but must remain available to take over when the system issues a takeover request, with enough warning to do so. This is a critical transition point: for the first time, the system rather than the human is responsible for monitoring the road while engaged.
* Examples: Mercedes-Benz DRIVE PILOT, which allows hands-off, eyes-off driving in specific traffic-jam situations in certain regions.

E. Level 4: High Driving Automation:
* The vehicle performs all driving tasks and monitors the environment within a specific operational design domain (ODD), such as a limited geographic area or a defined set of conditions.
* The human is not expected to intervene and is not a fallback. If the system encounters a situation it cannot handle, it performs a “minimal risk maneuver” (e.g., pulling over safely) on its own. Some operators add remote assistance, but no human input is required within the ODD.
* Examples: Robotaxis operating in geofenced areas (e.g., Waymo in Phoenix and San Francisco).

F. Level 5: Full Driving Automation:
* The vehicle can perform all driving tasks under all road and environmental conditions that a human driver could manage.
* No human intervention is ever required, so a steering wheel and pedals are unnecessary.
* Examples: None yet. Level 5 is the ultimate goal but remains in research and development, with no system deployed at scale.
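The division of responsibility across these levels can be captured in a small lookup, which might be handy when, say, tagging test logs by automation level. The sketch below is a hypothetical Python encoding; the names `SAELevel`, `sustained_control`, `human_must_supervise`, and `human_is_fallback` are illustrative and do not come from the J3016 text or any library.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """Hypothetical encoding of the six SAE J3016 automation levels."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def sustained_control(level: SAELevel) -> str:
    """Which driving controls the system can sustain on its own."""
    if level == SAELevel.NO_AUTOMATION:
        return "none"
    if level == SAELevel.DRIVER_ASSISTANCE:
        return "steering OR speed"
    return "steering AND speed"


def human_must_supervise(level: SAELevel) -> bool:
    """Levels 0-2: the human monitors the road at all times."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def human_is_fallback(level: SAELevel) -> bool:
    """Levels 0-3: a human must be ready to take over.

    At Level 3 this applies only when the system issues a takeover request;
    at Levels 4-5 the system handles its own fallback (minimal risk maneuver).
    """
    return level <= SAELevel.CONDITIONAL_AUTOMATION


if __name__ == "__main__":
    for level in SAELevel:
        print(f"L{level.value} {level.name:<24} control={sustained_control(level):<20} "
              f"supervise={human_must_supervise(level)} fallback={human_is_fallback(level)}")
```

Using `IntEnum` keeps the levels ordered, so "Level 2 or below" becomes a simple comparison.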

Pivotal Technologies Powering Self-Driving Breakthroughs

Progress in autonomous driving is built on several intertwined technological pillars, each of which has improved rapidly in recent years:

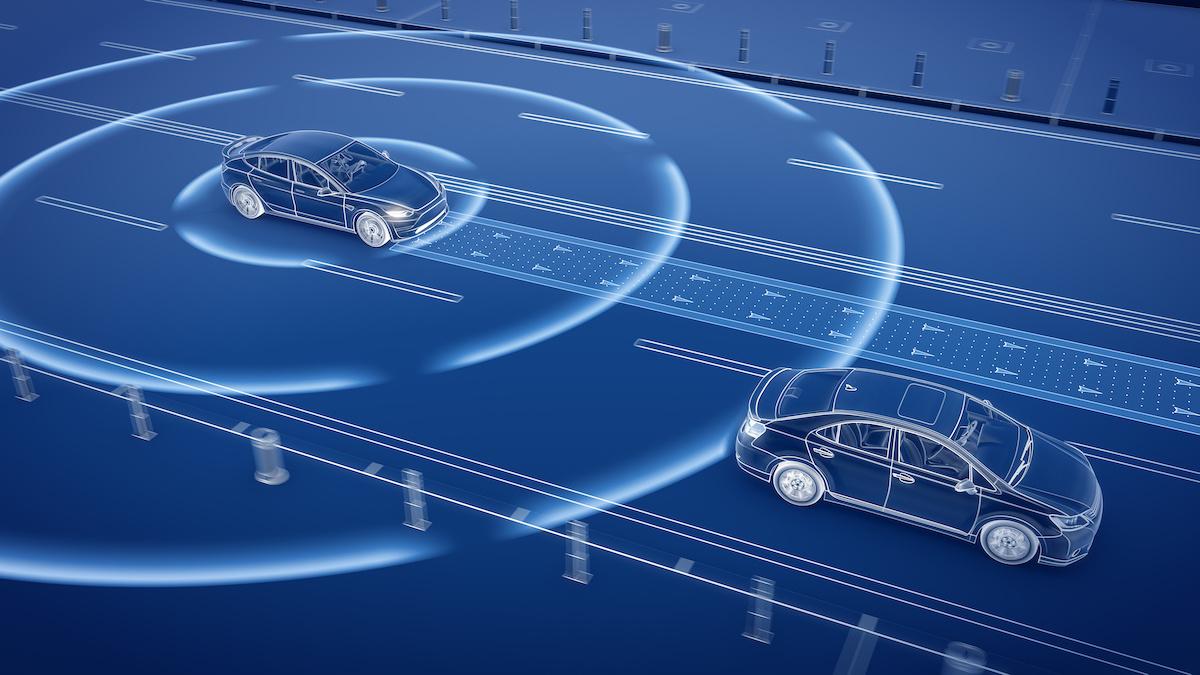

A. Sensor Fusion:
* Autonomous vehicles rely on a suite of sensors to perceive their surroundings. No single sensor type works well in all conditions, so breakthroughs have come from combining their data effectively.
* Lidar (Light Detection and Ranging): Emits pulsed laser light to measure distances and build highly detailed 3D point clouds of the environment. It excels at depth perception and mapping and, because it supplies its own illumination, is less affected by ambient lighting than cameras. Recent breakthroughs in solid-state lidar have reduced cost and size.
* Radar (Radio Detection and Ranging): Uses radio waves to detect objects and, via the Doppler effect, measure their relative speed directly. It performs well in adverse weather (fog, rain, snow) where optical sensors struggle and is crucial for long-range detection and velocity tracking.
* Cameras (Vision Systems): Provide rich visual information for recognizing traffic lights, lane markings, and signs and for classifying objects. Advances in high-resolution cameras and computer vision algorithms have made them powerful tools for object classification and scene understanding.
* Ultrasonic Sensors: Short-range sensors used primarily for parking assistance and low-speed object detection.
* The Breakthrough: Sensor fusion algorithms combine the raw, often noisy data from all these sensors into a single, consistent, and more accurate representation of the vehicle’s environment, which feeds the rest of the perception stack. Because the sensors are redundant and complementary, the fused picture stays usable when one of them is degraded.
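As a minimal illustration of the fusion idea, the sketch below combines independent range estimates of the same object by inverse-variance weighting, which is what a single Kalman filter update reduces to when nothing moves between measurements. The sensor readings and noise variances are made-up numbers, and production perception stacks also handle timing, data association, and motion, all of which this ignores.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class RangeEstimate:
    sensor: str
    range_m: float      # measured distance to the object (m)
    variance_m2: float  # assumed measurement noise variance (m^2)


def fuse(estimates: Sequence[RangeEstimate]) -> RangeEstimate:
    """Inverse-variance weighted fusion of independent measurements.

    Each sensor is weighted by 1/variance, so precise sensors dominate, and
    the fused variance is smaller than that of any single input.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    information = sum(1.0 / e.variance_m2 for e in estimates)
    weighted = sum(e.range_m / e.variance_m2 for e in estimates)
    return RangeEstimate("fused", weighted / information, 1.0 / information)


# Hypothetical readings for one object ahead: lidar is precise, the camera's
# monocular depth estimate is noisy.
readings = [
    RangeEstimate("lidar", 25.0, 0.01),
    RangeEstimate("radar", 25.4, 0.25),
    RangeEstimate("camera", 24.0, 1.0),
]
fused = fuse(readings)
print(f"fused range {fused.range_m:.3f} m, variance {fused.variance_m2:.4f} m^2")
```

In fog, a system might inflate the variances assigned to the camera and lidar, which automatically shifts weight toward radar without any special-case logic.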

B. Artificial Intelligence (AI) and Machine Learning (ML):
* AI is the ‘brain’ of the self-driving car. Deep learning in particular processes the vast amounts of sensor data from which the vehicle makes its decisions.
* Perception: Models trained on massive datasets of images and sensor readings detect and classify objects (cars, pedestrians, cyclists) and track them over time.
* Prediction: ML algorithms use the perceived scene to anticipate the movements and actions of other road users, which is crucial for safe navigation.
* Planning: The planner chooses the vehicle’s trajectory, speed, and maneuvers based on perception, prediction, traffic laws, and the destination, balancing safety, efficiency, and comfort.
* The Breakthrough: Advances in deep learning architectures (e.g., Convolutional Neural Networks for vision; Recurrent Neural Networks and, increasingly, Transformers for prediction) and the availability of vast annotated datasets have enabled AI systems to match or exceed human performance on some narrow perception tasks. Reinforcement learning is also being explored as a way for vehicles to learn driving strategies through trial and error in simulated environments.
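The prediction step can be illustrated with the simplest common baseline, a constant-velocity motion model, standing in for the learned predictors described above. The sketch below rolls two agents forward and reports their closest predicted approach; the `Agent` type, the scenario, the time step, and the 2 m margin are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Agent:
    x: float   # position (m)
    y: float
    vx: float  # velocity (m/s)
    vy: float


def rollout(agent: Agent, horizon_s: float, dt: float) -> list[tuple[float, float]]:
    """Predict future positions assuming the agent keeps its current velocity."""
    steps = int(round(horizon_s / dt))
    return [(agent.x + agent.vx * k * dt, agent.y + agent.vy * k * dt)
            for k in range(steps + 1)]


def closest_approach(a: Agent, b: Agent, horizon_s: float = 3.0,
                     dt: float = 0.1) -> tuple[float, float]:
    """Return (minimum distance in m, time in s) between two predicted paths."""
    best = (math.inf, 0.0)
    for k, ((xa, ya), (xb, yb)) in enumerate(zip(rollout(a, horizon_s, dt),
                                                 rollout(b, horizon_s, dt))):
        d = math.hypot(xa - xb, ya - yb)
        if d < best[0]:
            best = (d, k * dt)
    return best


ego = Agent(x=0.0, y=0.0, vx=10.0, vy=0.0)          # driving along x at 10 m/s
pedestrian = Agent(x=20.0, y=-3.0, vx=0.0, vy=1.5)  # crossing toward ego's lane
distance, when = closest_approach(ego, pedestrian)
if distance < 2.0:  # hypothetical safety margin
    print(f"conflict predicted: {distance:.2f} m apart at t = {when:.1f} s")
```

Learned predictors improve on this baseline mainly where agents turn, yield, or react to one another, which constant velocity cannot capture.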

C. High-Definition (HD) Mapping:
* Autonomous vehicles don’t rely on real-time sensor data alone; they also use pre-built, highly detailed 3D maps that go far beyond standard GPS navigation maps.
* These maps record precise lane markings, traffic light locations, curb heights, road geometry, and even the exact positions of signs and poles.
* The Breakthrough: Efficient methods for creating and continuously updating HD maps (often using specialized mapping vehicles or data crowd-sourced from vehicle fleets) have been crucial. The maps give the vehicle a strong prior understanding of its environment, allowing it to localize itself with centimeter-level precision and anticipate road features before they come into sensor view.
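One way to picture how a map lets the vehicle anticipate road features before they come into sensor view is a lookup of features ahead along the route. The sketch below assumes a hypothetical, heavily simplified map in which each feature is stored only by its distance along the route; real HD map formats are far richer, and the 120 m sensor range is an assumed figure.

```python
from bisect import bisect_left, bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class MapFeature:
    s_m: float  # distance along the route from its start (m)
    kind: str   # e.g. "stop_line", "traffic_light"


class RouteMap:
    """Hypothetical route-indexed map supporting 'what is ahead of me?' queries."""

    def __init__(self, features: list[MapFeature]) -> None:
        self._features = sorted(features, key=lambda f: f.s_m)
        self._s = [f.s_m for f in self._features]

    def ahead(self, ego_s_m: float, lookahead_m: float) -> list[MapFeature]:
        """Features between the ego position and ego + lookahead (inclusive)."""
        lo = bisect_left(self._s, ego_s_m)
        hi = bisect_right(self._s, ego_s_m + lookahead_m)
        return self._features[lo:hi]

    def beyond_sensor_range(self, ego_s_m: float, sensor_range_m: float,
                            lookahead_m: float) -> list[MapFeature]:
        """Features the map knows about but onboard sensors cannot see yet."""
        return [f for f in self.ahead(ego_s_m, lookahead_m)
                if f.s_m - ego_s_m > sensor_range_m]


route = RouteMap([
    MapFeature(50.0, "stop_line"),
    MapFeature(180.0, "traffic_light"),
    MapFeature(400.0, "crosswalk"),
])
# Vehicle 30 m along the route with an assumed 120 m effective sensor range.
for feature in route.beyond_sensor_range(30.0, sensor_range_m=120.0, lookahead_m=300.0):
    print(f"{feature.kind} in {feature.s_m - 30.0:.0f} m (from map, not yet sensed)")
```

Keeping features sorted by route distance makes each query a binary search rather than a scan of the whole map.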

D. High-Performance Computing (HPC) and Edge Computing:
* Processing gigabytes of sensor data per second and running complex AI models in real time requires substantial computing power onboard the vehicle.
* The Breakthrough: Powerful, more compact GPUs (Graphics Processing Units) and specialized AI chips (Application-Specific Integrated Circuits, or ASICs) optimized for neural network inference now fit within a vehicle’s power and space budget. Processing data on the vehicle itself (“edge computing”) avoids round trips to remote servers, keeping latency low enough for time-critical decisions.
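A back-of-the-envelope calculation shows why onboard data volumes reach gigabytes per second and why latency matters. The camera count, resolution, and frame rate below are hypothetical but plausible, and the figures cover uncompressed video only, before adding lidar or radar.

```python
def camera_bytes_per_second(cameras: int, width: int, height: int,
                            bytes_per_pixel: int, fps: int) -> int:
    """Raw (uncompressed) data rate produced by a set of identical cameras."""
    return cameras * width * height * bytes_per_pixel * fps


def distance_travelled_m(speed_m_s: float, latency_s: float) -> float:
    """How far the vehicle moves before a decision made with this latency takes effect."""
    return speed_m_s * latency_s


# Hypothetical setup: 8 cameras, 1920x1080, 24-bit RGB, 30 frames per second.
rate = camera_bytes_per_second(8, 1920, 1080, 3, 30)
print(f"raw camera data: {rate / 1e9:.2f} GB/s")

# At highway speed (30 m/s, about 108 km/h), every 100 ms of delay costs ~3 m.
for latency_ms in (10, 100, 500):
    print(f"{latency_ms:>4} ms -> {distance_travelled_m(30.0, latency_ms / 1000):.1f} m")
```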

E. Connectivity (V2X – Vehicle-to-Everything):
* While not strictly necessary for basic autonomy, V2X communication is seen as a key enabler of further safety and efficiency gains.
* Vehicle-to-Vehicle (V2V): Lets cars share their speed, position, and intentions, helping prevent collisions and enabling platooning (driving in close formation).
* Vehicle-to-Infrastructure (V2I): Enables communication with traffic lights, road signs, and roadside units to optimize traffic flow, warn of hazards, and assist with navigation.
* Vehicle-to-Pedestrian/Network (V2P/V2N): Connects vehicles to smartphones, wearables, or cloud systems for enhanced awareness and services.
* The Breakthrough: Advances in low-latency communication technologies, namely dedicated short-range communications (DSRC) and cellular V2X (C-V2X), including 5G-based variants, are making V2X a viable component of future autonomous systems and of a more interconnected transportation ecosystem.
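To make the V2V idea concrete, here is a hypothetical sketch of a receiving car deciding whether to warn about hard braking reported by a vehicle ahead, which may be hidden from its own sensors by other traffic. The message fields, the braking threshold, and the 3-second gap are assumptions for illustration; real deployments use standardized message sets (such as the SAE J2735 Basic Safety Message), not this format.

```python
from dataclasses import dataclass

HARD_BRAKING_M_S2 = -4.0  # hypothetical threshold for "hard" deceleration


@dataclass
class V2VMessage:
    sender_id: str
    position_m: float  # sender's position along the shared lane (m)
    speed_m_s: float
    accel_m_s2: float  # negative values mean braking


def should_warn(ego_position_m: float, ego_speed_m_s: float,
                msg: V2VMessage, max_time_gap_s: float = 3.0) -> bool:
    """Warn if a vehicle ahead is braking hard and we would reach its
    current position within max_time_gap_s at our current speed."""
    gap_m = msg.position_m - ego_position_m
    if gap_m <= 0 or ego_speed_m_s <= 0:
        return False  # sender is behind us, or we are stopped
    if msg.accel_m_s2 > HARD_BRAKING_M_S2:
        return False  # sender is not braking hard
    return gap_m / ego_speed_m_s <= max_time_gap_s


braking = V2VMessage("veh-17", position_m=50.0, speed_m_s=20.0, accel_m_s2=-6.0)
cruising = V2VMessage("veh-18", position_m=50.0, speed_m_s=25.0, accel_m_s2=-0.5)
print(should_warn(0.0, 25.0, braking))
print(should_warn(0.0, 25.0, cruising))
```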

Key Breakthroughs and Milestones

The path to full autonomy is iterative, marked by significant advancements in specific applications and capabilities:

A. Robotaxi Deployment: Waymo (Alphabet’s self-driving unit, which began as Google’s self-driving car project) and Cruise (a GM subsidiary) have each operated Level 4 robotaxis within limited operational design domains (ODDs) in specific cities, offering revenue-generating, driverless rides to the public in areas such as Phoenix, San Francisco, and Austin. Cruise’s driverless operations were suspended in late 2023, while Waymo has continued to expand. These deployments demonstrate the technical feasibility of driverless operation in complex urban environments, albeit under specific conditions.

B. Long-Haul Trucking Autonomy: The trucking industry is a prime target for autonomous technology due to the potential for efficiency gains, safety improvements, and addressing driver shortages. Companies like TuSimple, Aurora, and Plus have made significant strides in developing Level 4 autonomous trucking solutions for highway driving. These systems focus on “hub-to-hub” operations, with human drivers handling the first and last mile.

C. Last-Mile Delivery Bots: Smaller, slower autonomous vehicles already perform last-mile deliveries on university campuses, in residential areas, and even on some public roads. Because they operate at low speeds and in less complex environments, they demonstrate the viability of autonomous operation for specific tasks.

D. Advanced Driver-Assistance Systems (ADAS) in Production Vehicles: While not fully autonomous, the continuous improvement and widespread adoption of ADAS features such as adaptive cruise control with lane centering, automatic emergency braking (AEB), and parking assist are critical milestones. They familiarize the public with automation and lay the groundwork for higher levels of autonomy by maturing the sensor suites and software stacks those levels build on.

E. Simulation and Digital Twins: Developing and testing autonomous vehicles in the real world is time-consuming and expensive. Breakthroughs in high-fidelity simulation environments and “digital twins” of real-world scenarios let developers drive millions of virtual miles and replay rare or dangerous situations on demand, accelerating development and helping validate safety before real-world deployment.
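The core idea of simulation-based testing, sweeping many scenario variations that would be slow or unsafe to stage on real roads, can be shown with a toy model. The sketch below substitutes a simple stopping-distance formula, d = v·t_r + v²/(2a), for a real simulator; the speeds, reaction times, deceleration values, and obstacle distance are all hypothetical.

```python
from itertools import product


def stopping_distance_m(speed_m_s: float, reaction_s: float, decel_m_s2: float) -> float:
    """Distance covered during the reaction time plus constant-deceleration braking."""
    return speed_m_s * reaction_s + speed_m_s ** 2 / (2 * decel_m_s2)


def sweep(speeds, reaction_times, decels, obstacle_m):
    """Run every combination of scenario parameters; return those that collide."""
    failures = []
    for v, t_r, a in product(speeds, reaction_times, decels):
        if stopping_distance_m(v, t_r, a) > obstacle_m:
            failures.append((v, t_r, a))
    return failures


# Hypothetical grid: speeds (m/s), reaction times (s: fast system vs. typical
# human), decelerations (m/s^2: roughly dry vs. wet road), obstacle at 60 m.
failures = sweep(speeds=[10, 20, 30], reaction_times=[0.2, 1.5],
                 decels=[7.0, 3.0], obstacle_m=60.0)
print(f"{len(failures)} of 12 scenarios fail to stop in time")
for v, t_r, a in failures:
    print(f"  v={v} m/s, reaction={t_r} s, decel={a} m/s^2")
```

A real pipeline would replace the formula with a physics and sensor simulator, but the loop structure (enumerate scenarios, run, collect failures for review) is the same idea.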

The Impact of Self-Driving Technology

The implications of widespread autonomous driving extend far beyond just the act of driving:

A. Enhanced Safety: Human error (distraction, fatigue, impairment) is a factor in the large majority of traffic crashes. Autonomous vehicles, with 360-degree awareness and rapid, consistent reaction times, have the potential to significantly reduce collisions, injuries, and fatalities on the road.

B. Increased Accessibility and Mobility: Autonomous vehicles could provide unprecedented mobility for individuals unable to drive due to age, disability, or lack of a license, opening up new opportunities for independence and social participation.

C. Optimized Traffic Flow and Reduced Congestion: Connected and autonomous vehicles could communicate with each other and with infrastructure to smooth traffic flow, dampen the stop-and-go waves behind phantom traffic jams, and reduce overall congestion, leading to shorter travel times and less wasted fuel.

D. Economic Transformation:
* Logistics and Supply Chain: Autonomous trucks and delivery vehicles promise massive efficiency gains, lower operational costs, and 24/7 operation, transforming global logistics.
* Ride-Hailing Services: Robotaxi fleets could drastically reduce the cost of ride-hailing, making it more affordable and accessible than private car ownership for many.
* Real Estate and Urban Planning: Reduced need for parking spaces in cities could free up valuable land for housing, parks, or other amenities. Commute times might become less of a factor in where people choose to live.

E. New Business Models and Services: The advent of autonomous vehicles could spawn entirely new industries and services, from in-car entertainment and productivity tools to mobile retail and healthcare services delivered directly to consumers.

F. Environmental Benefits: Smoother driving patterns, reduced congestion, and optimized routing can lead to lower fuel consumption (for internal combustion engine vehicles) and more efficient energy use (for EVs), contributing to reduced emissions. Widespread adoption of electric autonomous vehicles would further amplify these benefits.

Challenges on the Road Ahead

Despite the impressive breakthroughs, several significant hurdles remain before widespread Level 5 autonomy becomes a reality:

A. Regulatory and Legal Frameworks: Governments worldwide are grappling with establishing clear regulations for autonomous vehicles, including liability in case of accidents, licensing, and operational guidelines. Harmonization of these laws across different jurisdictions is crucial for scaling.

B. Public Acceptance and Trust: While initial excitement is high, gaining widespread public trust in autonomous systems is paramount. Accidents, even rare ones, can severely impact public perception and adoption rates. Educating the public about the technology’s capabilities and limitations is key.

C. “Edge Cases” and Unpredictable Scenarios: Autonomous vehicles increasingly perform well in common driving situations. The challenge lies in handling “edge cases” – rare, unusual, or highly unpredictable scenarios (e.g., unexpected debris on the road, complex construction zones, unconventional human behavior) that are difficult to program for or to train on with sufficient data.

D. Cybersecurity: As vehicles become increasingly connected and software-dependent, they become targets for cyberattacks. Ensuring robust cybersecurity to prevent hacking, data breaches, and system manipulation is a critical and ongoing challenge.
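One basic defense against message manipulation is authenticating every message so that tampered or forged data is rejected. The sketch below uses an HMAC with a shared secret from Python's standard library purely to show the verify-before-trust pattern. It is a simplification: deployed V2X security (IEEE 1609.2) relies on certificate-based digital signatures instead, and this sketch omits replay protection such as timestamps or counters. The key and payload are hypothetical.

```python
import hashlib
import hmac
import json
from typing import Optional


def sign(payload: dict, key: bytes) -> dict:
    """Serialize the payload canonically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify(message: dict, key: bytes) -> Optional[dict]:
    """Return the payload only if the tag matches; otherwise reject (None)."""
    expected = hmac.new(key, message["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, message["tag"]):
        return None
    return json.loads(message["body"])


key = b"hypothetical-shared-secret"
msg = sign({"id": "veh-42", "speed_m_s": 25.0}, key)
tampered = {"body": msg["body"].replace("25.0", "5.0"), "tag": msg["tag"]}

print(verify(msg, key))       # accepted: original payload returned
print(verify(tampered, key))  # rejected: body no longer matches its tag
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not leak how many characters of the tag matched.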

E. Cost of Technology: While prices are falling, the advanced sensor suites, powerful computing platforms, and extensive R&D required still make Level 4/5 autonomous vehicles significantly more expensive than conventional cars. Mass production and economies of scale are needed to bring costs down.

F. Weather and Environmental Conditions: Radar is relatively robust in adverse weather, but lidar and cameras both degrade in heavy snow, heavy rain, and thick fog, and such extreme conditions can challenge even the most advanced sensor suites, reducing reliability.

G. Ethical Dilemmas (“Trolley Problem”): In hypothetical unavoidable accident scenarios, how should an autonomous vehicle be programmed to make decisions that prioritize one life over another? While rare in practice, these ethical questions require careful consideration and societal consensus.

Conclusion

The trajectory of “Self-Driving Tech Breakthroughs” is undeniably upward. From the initial inklings of cruise control to the complex ballet of robotaxis navigating bustling city streets, each advancement brings us closer to a future where driving is safer, more efficient, and more accessible for everyone. While the road to full autonomy is still being paved, the remarkable progress witnessed in recent years suggests that a truly autonomous revolution is not just inevitable, but actively unfolding before our eyes, promising to reshape our world in ways we are only just beginning to imagine.